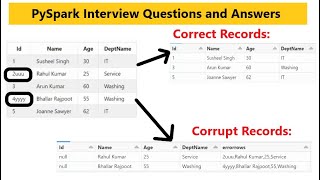

11. How to handle corrupt records in pyspark | How to load Bad Data in error file pyspark | #pyspark

Similar Tracks

12. how partition works internally in PySpark | partition by pyspark interview q & a | #pyspark

SS UNITECH

Data Caching in Apache Spark | Optimizing performance using Caching | When and when not to cache

Learning Journal

Pyspark Scenarios 18 : How to Handle Bad Data in pyspark dataframe using pyspark schema #pyspark

TechLake

5. KPMG pyspark interview question & answer | databricks scenario based interview question & answer

SS UNITECH

Explained on how to create Jobs & Different Types of Tasks in Databricks Workflow

LetsLearnWithChinnoVino

22 Optimize Joins in Spark & Understand Bucketing for Faster joins | Sort Merge Join | Broadcast Join

Ease With Data

32 Spark Memory Management | Why OOM Errors in Spark | Spark Unified Memory | Storage/Execution Mem

Ease With Data

PySpark | Tutorial-13 | Lazy Evaluation | Cache | Persistence | Bigdata Interview FAQ and Answers

Clever Studies

12. How to copy latest file or last modified file of ADLS folder using ADF pipeline

Azure Content : Annu